Huawei DNN Compression & Acceleration Technical Challenge: Uniform Quantizers

Dear students of MSU [Faculty],

The Huawei Russian Research Institute invites you to a Tech Talk with Huawei R&D for students of MSU [Faculty]!

When: 23.03.2022, 16:00 – 18:00

Where: [Faculty] of Moscow State University, room ___

As part of this event, we invite you to take part in a competition by solving a problem set by Kirill Solodskikh, the main speaker of the event and an engineer at the Huawei Russian Research Institute!

Prizes for successful solutions:

1st place – HUAWEI WATCH 3 Pro

2nd place – HUAWEI Freebuds Pro

3rd place – HUAWEI WATCH FIT

Mean Squared Quantization Error

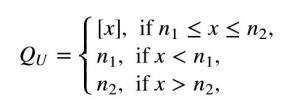

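Let us consider the uniform quantization function defined as follows:

$$Q_u(x) = \min\big(\max(\lfloor x \rceil, a),\, b\big),$$

where $[a, b]$ is the quantization segment, $a, b \in \mathbb{Z}$, $a < b$, and $\lfloor x \rceil$ is the rounding of $x$ to the nearest integer. The function $Q_u$ rounds $x$ to the closest integer value that lies in the segment $[a, b]$. To adjust the quantization segment to arbitrary data scales, one can use a quantization scale parameter $s > 0$ in the following way: $x \mapsto s \cdot Q_u(x / s)$. For instance, if a random variable $\xi$ lies in $[0, c]$ and we want to represent its values using the uint8 data type ($[a, b] = [0, 255]$), we can take the scale parameter $s = c / 255$. The resulting quantized random variable $\xi_Q = s \cdot Q_u(\xi / s)$ is then a categorical random variable with uint8 values scaled by $s$, i.e. $\xi_Q = s \cdot \eta$ with $\eta \in \{0, 1, \dots, 255\}$. But how do we measure which representation is better?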
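A minimal NumPy sketch of the uniform quantizer and its scaled version. It assumes round-to-nearest with NumPy's default tie-breaking (`np.rint` rounds halves to even; the text does not fix a tie rule) and, for the uint8 example, a hypothetical data range $[0, c]$ with $c = 2$. The helper names `quantize_uniform` and `quantize_scaled` are illustrative.

```python
import numpy as np

def quantize_uniform(x, a, b):
    """Q_u(x): round to the nearest integer, then clip into the segment [a, b]."""
    return np.clip(np.rint(x), a, b)

def quantize_scaled(x, s, a, b):
    """Scaled quantizer x -> s * Q_u(x / s)."""
    return s * quantize_uniform(np.asarray(x, dtype=float) / s, a, b)

# uint8 example: segment [0, 255], data assumed (hypothetically) to lie in [0, c].
c = 2.0
s = c / 255
x = np.array([0.0, 0.5, 1.3, 2.0, 2.5])  # 2.5 lies outside [0, c] and gets clipped
codes = quantize_uniform(x / s, 0, 255)   # integer codes eta in {0, ..., 255}
x_q = s * codes                           # xi_Q = s * eta
print(codes, x_q)
```

Values inside $[0, c]$ land within $s/2$ of their quantized version, while values beyond $c$ are clipped to the top code 255.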


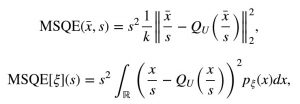

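To answer this question, we need a metric that shows how close the quantized representation $\xi_Q$ is to the initial one. One natural metric is the Mean Squared Quantization Error (MSQE):

$$
\begin{aligned}
\mathrm{MSQE}[x](s) &= \frac{1}{n} \sum_{i=1}^{n} \big(x_i - s\, Q_u(x_i / s)\big)^2, \quad x \in \mathbb{R}^n, \\
\mathrm{MSQE}[\xi](s) &= \int_{\mathbb{R}} \big(x - s\, Q_u(x / s)\big)^2 \, p_\xi(x)\, dx,
\end{aligned}
$$

where the first line defines the quantization error for vectors and the second for continuous random variables with density function $p_\xi$.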
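The vector form of MSQE translates directly into code. For a random variable, the integral can be approximated by the same sample mean over draws from $p_\xi$; this Monte Carlo estimate is our choice for illustration, not something the text prescribes. As a sanity check, when the segment is wide enough that nothing is clipped, the rounding error is roughly uniform on $[-s/2, s/2]$, so MSQE should be close to $s^2/12$.

```python
import numpy as np

def msqe(x, s, a, b):
    """MSQE[x](s) = (1/n) * sum_i (x_i - s * Q_u(x_i / s))^2."""
    x = np.asarray(x, dtype=float)
    x_q = s * np.clip(np.rint(x / s), a, b)
    return float(np.mean((x - x_q) ** 2))

# Values already on the grid s * Z inside the segment are represented exactly.
exact = msqe([0.0, 1.0, 2.0], 1.0, -128, 127)

# Monte Carlo estimate of MSQE[xi](s) for xi ~ N(0, 1) with a fine scale.
rng = np.random.default_rng(0)
xi = rng.standard_normal(100_000)
s = 0.05
estimate = msqe(xi, s, -128, 127)
print(exact, estimate, s ** 2 / 12)
```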

Smooth Quantization Error

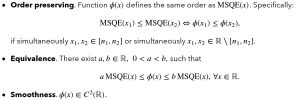

It is easy to see that the derivative of $Q_u$ is zero almost everywhere, which makes it impossible to use in smooth optimization algorithms such as gradient descent. Let us define a family of smooth quantization errors. We will say that a function $\phi(x): \mathbb{R} \to \mathbb{R}$ is a smooth quantization error (SQE) iff:

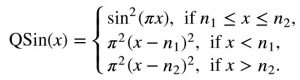
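The claim that $Q_u$ has zero derivative almost everywhere is easy to check numerically. The sketch below (segment $[-8, 7]$ chosen only for illustration) evaluates central finite differences at random points: they vanish at essentially every sampled point, while a difference taken across a half-integer jump changes by a whole step. Either way, gradient descent receives no useful signal through $Q_u$.

```python
import numpy as np

def q_u(x, a=-8, b=7):
    """Round to the nearest integer and clip into [a, b]."""
    return np.clip(np.rint(x), a, b)

rng = np.random.default_rng(0)
x = rng.uniform(-10.0, 10.0, size=100_000)
h = 1e-6
grad = (q_u(x + h) - q_u(x - h)) / (2 * h)   # central finite differences
frac_nonzero = float(np.mean(grad != 0))

# Across a jump (here at 0.5) the function changes by a whole integer step.
jump = q_u(0.5 + 1e-3) - q_u(0.5 - 1e-3)
print(frac_nonzero, jump)
```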

A simple example of a smooth quantization error is a squared sine wave glued together with a square function. We will call this function the quantization sine, or simply QSin:
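A minimal NumPy sketch of QSin under an assumed form: $\mathrm{QSin}(x) = \sin^2(\pi x)/\pi^2$ for $x \in [a, b]$, $(x-a)^2$ for $x < a$ and $(x-b)^2$ for $x > b$. The $1/\pi^2$ normalization is our assumption; it is the choice under which the pieces agree in value, first and second derivative at the integer endpoints $a$ and $b$, and under which $\mathrm{QSin}(n + t) \approx t^2$ near every integer $n$ of the segment, matching the squared rounding error $(x - Q_u(x))^2$ locally. The code also counts local minima of both functions on a fine grid, which illustrates (but does not prove) the second theoretical task below.

```python
import numpy as np

def qsin(x, a, b):
    """ASSUMED QSin: sin^2(pi x)/pi^2 on [a, b], (x-a)^2 left of a, (x-b)^2 right of b."""
    x = np.asarray(x, dtype=float)
    inner = np.sin(np.pi * x) ** 2 / np.pi ** 2
    return np.where(x < a, (x - a) ** 2, np.where(x > b, (x - b) ** 2, inner))

def sq_rounding_error(x, a, b):
    """Per-element squared quantization error (x - Q_u(x))^2 that MSQE averages."""
    x = np.asarray(x, dtype=float)
    return (x - np.clip(np.rint(x), a, b)) ** 2

def count_local_minima(f):
    """Number of strict interior local minima of a sampled function."""
    return int(np.sum((f[1:-1] < f[:-2]) & (f[1:-1] < f[2:])))

a, b = -2, 1
t = 1e-3
local_ratio = float(qsin(3 + t, -8, 7) / t ** 2)   # close to 1 near an integer

grid = np.linspace(a - 1, b + 1, 5001)             # step 0.001, hits every integer
n_min_qsin = count_local_minima(qsin(grid, a, b))
n_min_msqe = count_local_minima(sq_rounding_error(grid, a, b))
print(local_ratio, n_min_qsin, n_min_msqe)
```

Both functions have one minimum at each integer of $[a, b]$, i.e. $b - a + 1$ minima in total.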

Tasks for the challenge

Theoretical tasks

- Prove that QSin is a smooth quantization error.

- Prove that any smooth quantization error (SQE) has the same number of local minima as MSQE.

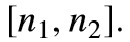

- Prove that any SQE is periodic on the segment

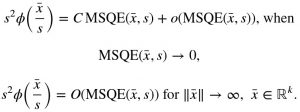

- (*) Prove that for any SQE $\phi$ the following holds:

and find such a constant for QSin.

- (*) Prove that for any random variable $\xi$ with a smooth density $p_\xi(x)$ and finite first and second moments, there exists at least one minimum for each of the following optimization problems:

$$\phi[\xi](s) \to \min_s, \qquad \mathrm{MSQE}[\xi](s) \to \min_s.$$
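A numerical illustration (not a proof) of the last task for $\xi \sim \mathcal{N}(0, 1)$ and the segment $[-8, 7]$: both curves, estimated from samples on a grid of scales, reach their smallest value strictly inside the grid, while in the limits $s \to 0$ (everything clipped) and $s \to \infty$ (everything rounded to zero) both errors tend to $\mathbb{E}[\xi^2] = 1$. For QSin, the sketch assumes the scaling $\mathrm{QSin}[\xi](s) = s^2\, \mathbb{E}\, \mathrm{QSin}(\xi / s)$, by analogy with $\mathrm{MSQE}[\xi](s) = s^2\, \mathbb{E}\,(\xi/s - Q_u(\xi/s))^2$, and the assumed form $\sin^2(\pi u)/\pi^2$ on $[a, b]$ glued to $(u-a)^2$ and $(u-b)^2$ outside.

```python
import numpy as np

def msqe_rv(x, s, a, b):
    """Sample estimate of MSQE[xi](s)."""
    return float(np.mean((x - s * np.clip(np.rint(x / s), a, b)) ** 2))

def qsin_rv(x, s, a, b):
    """Sample estimate of the ASSUMED QSin[xi](s) = s^2 * E[QSin(xi / s)]."""
    u = x / s
    inner = np.sin(np.pi * u) ** 2 / np.pi ** 2
    phi = np.where(u < a, (u - a) ** 2, np.where(u > b, (u - b) ** 2, inner))
    return float(s ** 2 * np.mean(phi))

rng = np.random.default_rng(0)
x = rng.standard_normal(50_000)
a, b = -8, 7
s_grid = np.linspace(0.01, 3.0, 300)
msqe_curve = np.array([msqe_rv(x, s, a, b) for s in s_grid])
qsin_curve = np.array([qsin_rv(x, s, a, b) for s in s_grid])

i_m = int(np.argmin(msqe_curve))
i_q = int(np.argmin(qsin_curve))
print("MSQE minimum near s =", s_grid[i_m], "QSin minimum near s =", s_grid[i_q])
```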


Coding tasks

1. Implement MSQE and QSin as loss functions in PyTorch.

2. By sampling from the standard normal and Laplace distributions, plot the functions $\mathrm{QSin}[\xi](s)$ and $\mathrm{MSQE}[\xi](s)$ for the following quantization segments: [-128, 127], [-8, 7], [-2, 1].
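A PyTorch sketch for both coding tasks. Besides the definitions in the text, it makes two assumptions of its own: QSin takes the form $\sin^2(\pi u)/\pi^2$ on $[a, b]$ glued to $(u-a)^2$ and $(u-b)^2$ outside, and $\mathrm{QSin}[x](s) = s^2 \cdot \mathrm{mean}(\mathrm{QSin}(x/s))$ mirrors the $s^2$ factor implicit in MSQE. Function names, the sample size and the scale grid $s \in [0.01, 2]$ are illustrative choices. Both losses are ordinary tensor expressions, so autograd provides $\partial/\partial s$ for a gradient-based scale search.

```python
import math
import torch

def msqe_loss(x, s, a, b):
    """MSQE[x](s) = mean((x - s * Q_u(x / s))^2) with Q_u = round, then clamp to [a, b]."""
    x_q = s * torch.clamp(torch.round(x / s), a, b)
    return torch.mean((x - x_q) ** 2)

def qsin(u, a, b):
    """ASSUMED QSin(u): sin^2(pi u) / pi^2 on [a, b], (u - a)^2 below a, (u - b)^2 above b."""
    inner = torch.sin(math.pi * u) ** 2 / math.pi ** 2
    return torch.where(u < a, (u - a) ** 2, torch.where(u > b, (u - b) ** 2, inner))

def qsin_loss(x, s, a, b):
    """ASSUMED QSin[x](s) = s^2 * mean(QSin(x / s)), mirroring the s^2 factor in MSQE."""
    return s ** 2 * torch.mean(qsin(x / s, a, b))

def loss_curves(x, s_grid, a, b):
    """Evaluate MSQE and QSin on a grid of scales, e.g. for plotting against s."""
    msqe = torch.stack([msqe_loss(x, s, a, b) for s in s_grid])
    qs = torch.stack([qsin_loss(x, s, a, b) for s in s_grid])
    return msqe, qs

torch.manual_seed(0)
n = 100_000
zero = torch.tensor(0.0, dtype=torch.float64)
one = torch.tensor(1.0, dtype=torch.float64)
samples = {
    "normal": torch.randn(n, dtype=torch.float64),
    "laplace": torch.distributions.Laplace(zero, one).sample((n,)),
}
segments = [(-128, 127), (-8, 7), (-2, 1)]
s_grid = torch.linspace(0.01, 2.0, 100, dtype=torch.float64)

# curves[(distribution, segment)] = (MSQE values, QSin values) over s_grid
curves = {
    (name, seg): loss_curves(x, s_grid, *seg)
    for name, x in samples.items()
    for seg in segments
}

# QSin is a smooth tensor expression, so autograd yields d/ds for scale search.
s = torch.tensor(0.5, dtype=torch.float64, requires_grad=True)
qsin_loss(samples["normal"], s, -8, 7).backward()
print(s.grad)
```

To draw the plots, pass `s_grid.numpy()` and each curve (converted with `.numpy()`) to a plotting library such as matplotlib. Under the assumed normalization, for a fine scale with no clipping the two losses differ by a constant factor: MSQE is close to $s^2/12$ while QSin is close to $s^2/(2\pi^2)$, so their ratio is about $6/\pi^2 \approx 0.61$.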

Please send your solution in PDF or DOC format as a reply to this topic.

DEADLINE FOR SUBMITTING YOUR SOLUTION: MARCH 18